Originally published on intellyx.com on June 27, 2024.

BrainBlog for Amberflo by Jason Bloomberg

In the first article in this series, Intellyx Principal Analyst Jason English introduced the concept of composite AI: a supply chain consisting of multiple AI suppliers, with multiple models and multiple training data sets working together in a loosely coupled fashion, based on the right fit for the job.

He explained how metering such composite AI-based solutions is central to monetizing them.

In the second article in the series, Intellyx Principal Analyst Eric Newcomer explained usage-based generative AI (genAI) pricing, and how different genAI tools each have different pricing models.

Regardless of whether a genAI-based offering leverages one or many models, monetization of any technical solution boils down to one incontrovertible fact: revenues must exceed costs.

Given how new and untested today’s genAI-based solutions are, both sides of this equation can be difficult to predict as solutions scale to meet demand, especially when the solution depends upon multiple language models.

The Many Reasons to Combine Multiple Language Models

Why would an organization build a composite AI-based application in the first place? And given all the complexities of the various pricing models and usage patterns, how should organizations go about metering and monetizing multiple language models? Here are some likely scenarios.

Combining the best features of multiple publicly available large language models (LLMs) – As Eric Newcomer pointed out, each model on the market has its own strengths, based on both its training data and the construction of the model itself. Most of today’s genAI solutions combine LLMs to leverage their respective strengths.

Price arbitrage – Some organizations shift workloads from one model to another to get the best deal at the time. However, because each model works differently, such arbitrage can be more complicated (and thus expensive) than, say, price arbitrage across public cloud providers. Such arbitrage is impossible without genAI metering.

Mixing DIY and public LLMs – An organization building its own ‘do it yourself’ LLM can achieve market-leading differentiation, but creating an LLM from scratch is a Herculean effort.

Even if your organization has such ambitions, it may not want to put all its eggs in the DIY model basket. Instead, combine internally constructed models with public ones to manage costs and augment the abilities of a single model.

Leveraging models of different sizes – Much of the buzz around genAI centers on LLMs – but smaller language models can provide complementary capabilities to LLMs at a lower cost.

Smaller language models are more likely to be task-specific than the more general purpose LLMs. They require fewer computational resources, making them more cost-effective and accessible, especially for DIY scenarios.

Crafting the right usage and pricing models for combinations of language models of different sizes, therefore, can be essential for delivering a cost-effective AI-based offering.

Leveraging domain-specific models – General-purpose models like the GPT family, Llama, and Falcon may get most of the press, but domain-specific models that their creators have trained and tuned for industry-specific or use case-specific purposes are coming to market all the time.

Domain-specific models are typically smaller than the general-purpose ones, and thus can be less expensive to deploy. A particular genAI solution, however, is likely to combine both types of models to deliver a differentiated capability to the market, thus requiring careful metering and billing capabilities.
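To make the cost side of these scenarios concrete, here is a minimal Python sketch of comparing metered token usage across several models, as price arbitrage would require. The model names, the per-token prices, and the `request_cost` and `cheapest_model` helpers are all hypothetical and chosen for illustration only; they do not reflect any vendor's actual price list or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPrice:
    """Price of one model in USD per 1,000 tokens (made-up figures)."""
    input_per_1k: float
    output_per_1k: float


# Hypothetical price table: one large general-purpose model, one small
# task-specific model, and one domain-specific model.
PRICES = {
    "large-general": ModelPrice(input_per_1k=0.0100, output_per_1k=0.0300),
    "small-task": ModelPrice(input_per_1k=0.0005, output_per_1k=0.0015),
    "domain-tuned": ModelPrice(input_per_1k=0.0020, output_per_1k=0.0060),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the cost in USD of one metered request against one model."""
    price = PRICES[model]
    return (input_tokens / 1000 * price.input_per_1k
            + output_tokens / 1000 * price.output_per_1k)


def cheapest_model(candidates: list[str], input_tokens: int,
                   output_tokens: int) -> str:
    """Pick the lowest-cost model among those able to handle the task."""
    return min(candidates,
               key=lambda m: request_cost(m, input_tokens, output_tokens))


if __name__ == "__main__":
    # Only some models may be suitable for a given task, so the caller
    # supplies the candidate list rather than searching the whole table.
    choice = cheapest_model(["large-general", "domain-tuned"], 2000, 500)
    print(choice, round(request_cost(choice, 2000, 500), 4))
```

The sketch compares price alone. Because each model works differently, a real comparison would also have to account for differences in output quality and in how each model counts tokens, which is part of why such arbitrage is harder than it looks.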

The Amberflo Solution

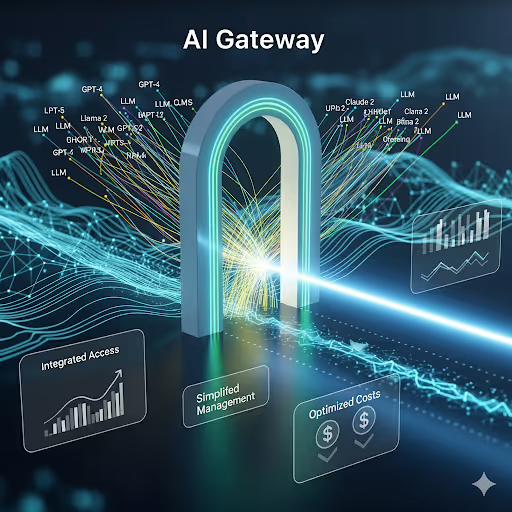

Amberflo has updated its cloud metering and monetization solution for genAI-based applications. The platform provides usage metering, cost tracking, and customer billing capabilities for any combination of LLMs that a customer would like to deploy.

Amberflo is well-suited for such metering and monetization of multiple LLMs. With Amberflo, operators can dynamically switch models and versions of LLMs, adding custom metrics as needed.

Operators can also track per-customer, per-team, or per-user LLM usage across different models, correlating model usage costs to implement customer-friendly, yet profitable pricing models.
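As a rough illustration of what correlating per-customer usage with cost involves, the following Python sketch rolls up metered events by customer and model and then checks the margin on a customer's bill. The event records, customer names, and helper functions are hypothetical; this is not Amberflo's API, only a sketch of the underlying bookkeeping.

```python
from collections import defaultdict

# Hypothetical metered events: (customer, model, cost to the provider in USD).
EVENTS = [
    ("acme", "large-general", 0.040),
    ("acme", "small-task", 0.002),
    ("globex", "domain-tuned", 0.008),
    ("acme", "large-general", 0.020),
]


def cost_by_customer_and_model(events):
    """Sum provider cost per customer, broken down by model."""
    totals = defaultdict(lambda: defaultdict(float))
    for customer, model, cost in events:
        totals[customer][model] += cost
    # Convert to plain dicts so callers get no defaultdict side effects.
    return {customer: dict(models) for customer, models in totals.items()}


def gross_margin(revenue: float, cost: float) -> float:
    """Return the fraction of revenue left after model costs."""
    if revenue <= 0:
        raise ValueError("revenue must be positive")
    return (revenue - cost) / revenue


if __name__ == "__main__":
    totals = cost_by_customer_and_model(EVENTS)
    acme_cost = sum(totals["acme"].values())
    # Assume, for illustration, that acme is billed 0.10 USD for this usage.
    print(totals["acme"], round(gross_margin(0.10, acme_cost), 3))
```

A negative margin for any customer is the signal that revenues are not exceeding costs for that account, which is exactly the condition that metering is meant to surface early.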

The Intellyx Take

What all the various composite AI scenarios have in common is their complexity as compared to single model-based solutions – and with such complexity comes cost.

Given the massive data and processing requirements of LLMs, costs can easily run away from you, especially in composite scenarios. Transparent billing is equally important for complex composite AI scenarios, as your customers require control over their own costs.

While you need to pass your costs on to your customers, you must also ensure you give them value for their dollar. Without adequate usage metering and billing, you and your customers are working in the dark.

Copyright © Intellyx BV. Amberflo is an Intellyx customer. None of the other organizations mentioned in this article is an Intellyx customer. Intellyx retains final editorial control of this article. No AI was used to write this article.
